The Two I/O Fallacies

This short webinar clip will cover the two I/O fallacies.

The IOPS Fallacy

The myth:

I have more than enough IOPS to handle the workload.

The reality:

Workloads are processing 30-40% slower than they need to be due to Split, Small, Random I/O patterns generated by the Windows O/S.

Sequential I/O ALWAYS outperforms Random I/O.

The truth:

Only a small percentage of total I/O capacity is used at any one time.

High IOPS and I/O response time ratings give us a false sense of performance.

V-locity optimizes the work being done, not the spare capacity that isn’t being used.
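The arithmetic behind this point can be sketched in a few lines of Python. This is a simplified model, not a measurement: it assumes the job is limited by the rate the workload actually drives rather than by the device rating. The 600,000 IOPS rating and the 3% usage figure come from the use case described in the transcript; the job size and the 30% reduction in I/O count are hypothetical values chosen only for illustration.

```python
def job_seconds(total_ios: int, achieved_iops: float, rated_iops: float) -> float:
    """Time to finish a job that issues total_ios I/Os.

    The job runs at the rate the workload actually drives (achieved_iops),
    capped by what the device is rated for (rated_iops).
    """
    effective_iops = min(achieved_iops, rated_iops)
    return total_ios / effective_iops


RATED_IOPS = 600_000     # device rating mentioned in the transcript
ACHIEVED_IOPS = 18_000   # 3% of the rating, the share actually in use
TOTAL_IOS = 64_800_000   # hypothetical job size, for illustration only

baseline = job_seconds(TOTAL_IOS, ACHIEVED_IOPS, RATED_IOPS)

# Doubling the rated IOPS adds headroom but does not change the job time.
bigger_array = job_seconds(TOTAL_IOS, ACHIEVED_IOPS, 2 * RATED_IOPS)

# Moving the same data with 30% fewer I/Os (a hypothetical reduction)
# shortens the job, because the work itself got smaller.
fewer_ios = job_seconds(TOTAL_IOS * 7 // 10, ACHIEVED_IOPS, RATED_IOPS)

print(f"baseline:      {baseline:.0f} s")
print(f"bigger array:  {bigger_array:.0f} s")
print(f"fewer I/Os:    {fewer_ios:.0f} s")
```

In this model, extra rated capacity is spare headroom the job never touches, while reducing the number of I/Os needed for the same data changes the job time directly.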

The I/O Response Time Fallacy

The myth:

Faster I/O response time is better.

The reality:

One individual smaller I/O transfers faster than one individual larger I/O.

Response time on its own also doesn’t take into account:

Split vs. Contiguous I/O

Random vs. Sequential I/O

The truth:

The individual response time of each I/O has been overprioritized in the performance analysis equation.

Overall throughput is always slower when data is transferred with Split, Small, Random I/O.

Overall throughput is always faster when data is transferred with Contiguous, Larger, Sequential I/O.
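A small worked example shows how a faster individual response time can coexist with slower overall throughput. It is a sketch under stated assumptions: I/Os are issued one at a time, and the response times (0.1 ms for a 4K I/O, 0.4 ms for a 64K I/O) are hypothetical figures, not measurements from any device. The 4K and 64K sizes are the ones used in the transcript.

```python
def throughput_mb_s(io_bytes: int, response_s: float) -> float:
    """Throughput in MB/s when I/Os of one size are issued back to back."""
    return io_bytes / response_s / 1_000_000


def transfer_seconds(total_bytes: int, io_bytes: int, response_s: float) -> float:
    """Time to move total_bytes using I/Os of io_bytes, one at a time."""
    io_count = -(-total_bytes // io_bytes)  # ceiling division
    return io_count * response_s


TOTAL_BYTES = 2**30        # 1 GiB of data to move

SMALL_IO = 4 * 1024        # 4K I/O
SMALL_RESPONSE = 0.0001    # hypothetical: 0.1 ms per 4K I/O

LARGE_IO = 64 * 1024       # 64K I/O
LARGE_RESPONSE = 0.0004    # hypothetical: 0.4 ms per 64K I/O

for name, size, resp in [
    ("4K", SMALL_IO, SMALL_RESPONSE),
    ("64K", LARGE_IO, LARGE_RESPONSE),
]:
    mb_s = throughput_mb_s(size, resp)
    secs = transfer_seconds(TOTAL_BYTES, size, resp)
    print(f"{name}: {resp * 1000:.1f} ms per I/O, {mb_s:.1f} MB/s, {secs:.1f} s total")
```

With these assumed numbers, each 64K I/O takes four times as long as a 4K I/O, yet it carries sixteen times the data, so the full transfer finishes sooner. The model leaves out split and random access effects, which would add further I/Os and latency to the small-I/O case.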

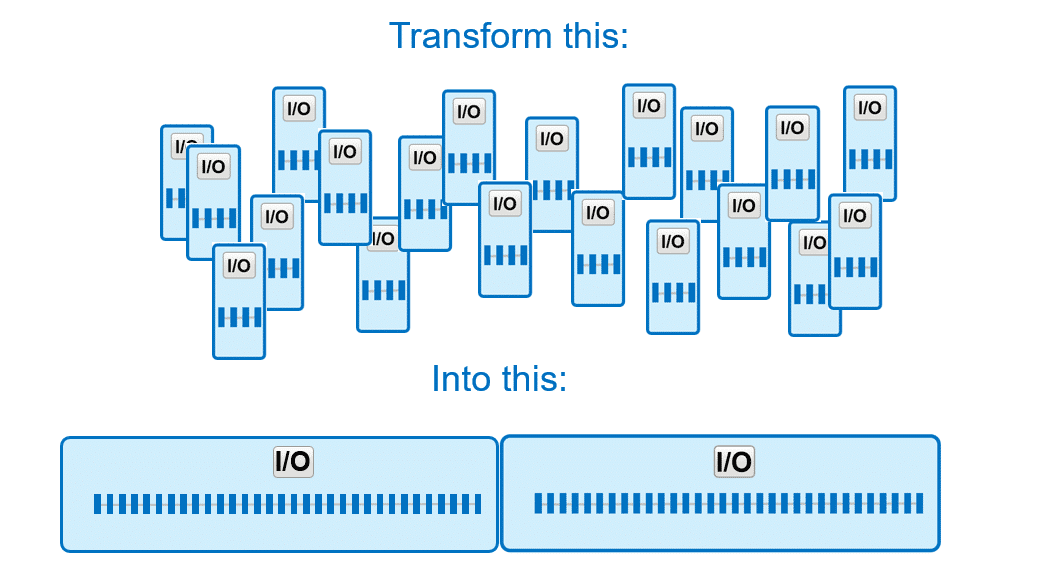

With the two I/O fallacies in mind, here is what we want to happen to I/O at a high level: take Split, Small, Random I/O and transform it into Contiguous, Larger, Sequential I/O. That’s the key to getting back 30-40% of throughput. Your hardware SHOULD be able to perform faster, but because of the way Windows handles the data logically, it’s like Windows has its foot on the brake pedal of a Maserati. Think of DymaxIO as pressing down the accelerator.

[Transcript]

00:00 Jennifer Joyce: I want to real quick discuss what I would like to term the two I/O fallacies, right? The first one is the IOPS fallacy, and then the next one is the I/O response time fallacy. So let’s talk about the IOPS fallacy first. First, what is the myth? The myth is that I have more than enough IOPS to handle the workload, maybe I’ve got an all-flash SAN, I’ve got 600,000 IOPS on it, which is actually a real use case that we had. It was very, very interesting. On this one particular use case that I went through, they had an all-flash Pure SAN, 600,000 IOPS, and this was a server POC we did, they only had 11 physical servers attached to it ’cause the workloads were so heavy and they were really missing their SLAs. Their data warehouse server, we were able to shave the overnight job in half, from seven hours to three and a half, and we were able to shave about 15 minutes off their SQL workload, which got them under their SLA. So it’s kind of an interesting thing to look at. So when we look at the reality of this, workloads are processing 30-40% slower than they need to be due to… And you’re gonna hear this theme a couple of times, the nature or the way that I/O is structured when it gets issued from Windows. So Windows is creating a data pattern of small, split, and random I/Os, that’s generated directly by Windows.

01:22 JJ: Now, a point here is that sequential I/O always, always, always, always, outperforms random I/O on any hardware that you wanna run tests on. So that’s gonna be very interesting. So when we look at that and we wanna look at what’s the truth of the situation, only a small percentage of the total I/O capacity is being used at any one time. For example, if I walk into a crowded room and let’s say the room has 20-foot ceilings, and this room is packed, there’s shoulder to shoulder, and someone comes in and says, “Okay, your mission, should you choose to accept it, is to walk from this side of the room to that side of the room and not touch anybody else. This message will self destruct in five seconds.” I’m not gonna be able to pull that off, even though I’ve got 15 feet of clear air space above me, because I have to use the working space down here where everyone’s standing in that five to six feet of space. So that’s a really good analogy for when we have over-provisioned hardware, we’re looking at, hey, I’ve got these huge IOPS ratings. It doesn’t really matter. What we wanna look at is the 3% of the IOPS you are using. We can make that 3% go 30-40% faster.

02:31 JJ: The rest of it’s just a distraction. So that’s what we really wanna focus our attention on, and what happens is with those higher IOPS ratings and all that headroom is we get this false sense of security because of all of that, that rating, and what we’re really losing is the way that Windows is designed and sends data out, it really is zapping 30-40% of the throughput potential out of that hardware, and we can give that back to you. So that’s the first I/O fallacy.

03:03 JJ: So the other thing that we wanna look at then is, what is that second I/O fallacy? And that is the I/O response time fallacy. Now, this one’s really interesting. We can really get misled by I/O response time. We like to look at… Let’s take a look at the myth here. We like to look at the sub-millisecond response times on individual I/Os, and that also gives us a false sense of security because we think that faster I/O response time is better. That is not always the case, right? So let’s look at the reality, one individual, smaller I/O transfers faster than one individual larger I/O, so if you had a 4K I/O versus a 64K I/O and you time them, 64K is gonna take longer.

03:45 JJ: The other thing this doesn’t factor into play is that it doesn’t take into account the configuration of the I/O. You’d have split I/O versus contiguous I/O, you’ll also have random I/O versus sequential I/O. And all three of these factors play in significantly. So that’s what we really wanna look at. And the truth of the matter is that the individual response time of each I/O is really over-prioritized when we’re looking and analyzing what our throughput is. It’s important, yes, and having flash is a wonderful thing, but it has been over-prioritized because of this missing of what’s the nature of the I/O and the overall throughput is always gonna be slower when data is transferred with small split random I/O and it’s always gonna be faster when it’s transferred with contiguous larger sequential I/O. And we’re gonna get into here shortly exactly how V-locity transforms I/O at the source within the Windows Operating System, so that we get the contiguous larger sequential I/O that gives you 30-40% faster throughput. We’re also gonna talk about how this is optimized, this optimization work is done while lowering resource consumption on a system, lowering CPU utilization, things like that, and that’s gonna be coming up in these use cases that we’re gonna jump into right now. So, Howard.

Watch the video above.

Originally published Aug 16, 2020. Updated Aug 4, 2021.