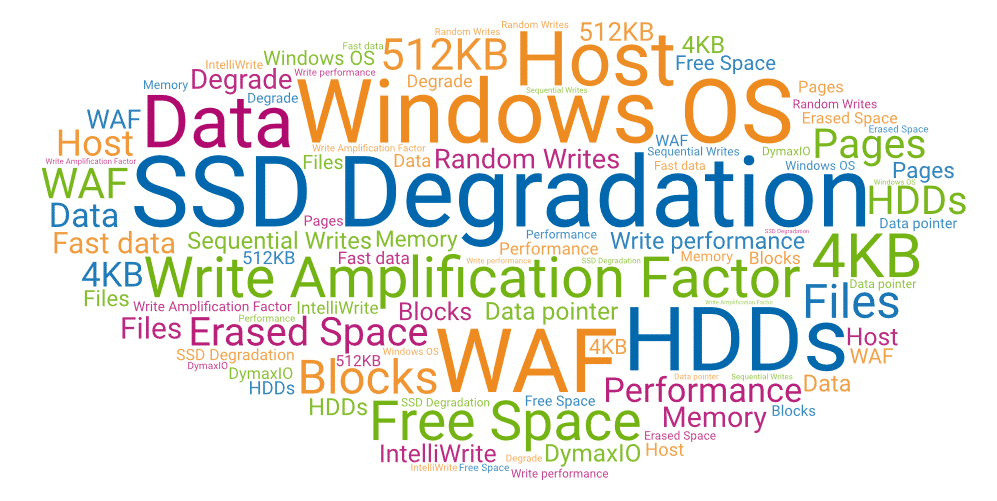

In Part 1, I explained how SSDs can degrade over time and that the reason is an undesirable SSD phenomenon called write amplification, measured by the Write Amplification Factor (WAF). The WAF is a numerical value that indicates the actual amount of data written to an SSD relative to the amount of data the host (e.g., the Windows operating system) requested to be written:

$$\text{WAF} = \frac{\text{data written to the SSD}}{\text{data written by the host}}$$

This occurs because, unlike on HDDs, data on an SSD cannot be directly overwritten; it can only be written to erased space. On a brand-new, initialized SSD, all the pages are in a free (erased) state, so there is no problem finding erased space for new data. But as the SSD fills up, erased space increasingly has to be created before new data can be written, and the extra writes this requires cause the WAF to increase. A higher WAF means SSD performance degrades, because more writes occur than were originally requested.
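The formula above is a plain ratio, so it can be sketched as a one-line helper. The function name is a hypothetical illustration, and the two sample calls only restate the best case (every host write lands on an erased page) and the 4KB-into-512KB worst case discussed later in this post.

```python
KB = 1024


def write_amplification_factor(nand_bytes_written: int, host_bytes_written: int) -> float:
    """WAF = data physically written to the flash / data the host asked to write."""
    if host_bytes_written <= 0:
        raise ValueError("host_bytes_written must be positive")
    return nand_bytes_written / host_bytes_written


# Fresh drive: the 4KB host write lands on an already-erased page, nothing extra is written.
print(write_amplification_factor(4 * KB, 4 * KB))
# Worst case: a 4KB host write forces a whole 512KB block to be rewritten.
print(write_amplification_factor(512 * KB, 4 * KB))
```

A WAF of 1.0 is the ideal; anything above it is internal write traffic the host never asked for.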

In Part 2, I explain in more detail why SSDs degrade over time. To do this, I must first define two terms: SSD Pages and Blocks.

Pages: A page is the smallest unit of data that can be read from or written to an SSD, and it is usually 4KB in size. In this 4KB case, even if the file data is smaller than 4KB, it still occupies a full 4KB page on the SSD. If 5KB of data needs to be written, two 4KB pages are needed to hold it.
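The page arithmetic is a ceiling division: any partial page still consumes a whole page. A minimal sketch, assuming the 4KB page size from the example (the helper name is illustrative):

```python
PAGE_SIZE = 4 * 1024  # assumed 4KB page, as in the example above


def pages_needed(data_bytes: int, page_size: int = PAGE_SIZE) -> int:
    """Whole pages required to store data_bytes (a partial page still uses a full page)."""
    if data_bytes < 0:
        raise ValueError("data_bytes must be non-negative")
    return -(-data_bytes // page_size)  # ceiling division using integers only


for size_kb in (1, 4, 5, 8):
    print(f"{size_kb}KB -> {pages_needed(size_kb * 1024)} page(s)")
```

The 5KB case needs two pages, so 3KB of the second page holds no file data.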

Blocks: Pages are organized into Blocks. For example, some Blocks are 512KB in size. With a 4KB page size and a 512KB block size, there are 128 pages per block (512KB / 4KB = 128): the first block on the SSD contains Page 0 to Page 127, the next contains Page 128 to Page 255, and so on. For example:

Block 0

| Page 0 | Page 1 | Page 2 | Page 3 |

| Page 4 | Page 5 | Page 6 | Page 7 |

:

:

| Page 120 | Page 121 | Page 122 | Page 123 |

| Page 124 | Page 125 | Page 126 | Page 127 |

Block 1

| Page 128 | Page 129 | Page 130 | Page 131 |

| Page 132 | Page 133 | Page 134 | Page 135 |

:

:

| Page 248 | Page 249 | Page 250 | Page 251 |

| Page 252 | Page 253 | Page 254 | Page 255 |
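With a fixed number of pages per block, finding which block a page lives in is a simple division, with blocks counted from 0 as in the layout above. A sketch assuming the 4KB page / 512KB block example; the helper names are hypothetical, and real drives add their own internal addressing on top of this.

```python
PAGE_SIZE_KB = 4
BLOCK_SIZE_KB = 512
PAGES_PER_BLOCK = BLOCK_SIZE_KB // PAGE_SIZE_KB  # 128 in this example


def locate_page(page_number: int) -> tuple[int, int]:
    """Return (block number, offset within that block) for a global page number."""
    return divmod(page_number, PAGES_PER_BLOCK)


def pages_in_block(block_number: int) -> range:
    """Global page numbers that belong to block_number."""
    first = block_number * PAGES_PER_BLOCK
    return range(first, first + PAGES_PER_BLOCK)


print(PAGES_PER_BLOCK)
print(locate_page(127), locate_page(130))
block_1 = pages_in_block(1)
print(block_1[0], block_1[-1])
```

Page 127 is the last page of Block 0, and Page 130 is the third page (offset 2) of Block 1.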

SSD Write Performance Degrades Over Time

Now that these terms are defined, let's look at how they affect SSD performance and degradation, specifically write performance. As indicated before, data cannot be directly overwritten on SSDs. Suppose a small piece of existing file data already stored on the SSD, say on Page 0 of Block 0, needs to be updated. There are a few restrictions when writing to an SSD:

- A write to a page can only occur if that page has first been erased.

In our 4KB example, data can only be written in 4KB pages.

- A single page cannot be erased on its own. To erase a page, the whole block containing it must be erased.

In our example, pages can only be erased in 512KB blocks.

If that one page needs to be updated in place while retaining the data in the rest of the pages in that block, the following steps would need to occur:

1. That whole block needs to be read into memory.

2. The one page needs to be updated in memory.

3. The whole block needs to be erased (remember that data can only be written to erased pages).

4. The updated block in memory is then written back to the newly erased block.
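The four steps above can be sketched with a toy flash block that enforces both restrictions: a page can only be programmed when erased, and erasing works only on the whole block. The `NandBlock` class and `update_page_in_place` function are hypothetical illustrations, assuming the 4KB page / 128-page block example; they count every page the flash physically writes so the resulting WAF can be read off directly.

```python
PAGE_SIZE = 4 * 1024
PAGES_PER_BLOCK = 128  # 512KB block / 4KB page, as in the example
ERASED = None          # marker for a page in the erased state


class NandBlock:
    """Toy flash block: pages are programmed one at a time but erased only as a whole block."""

    def __init__(self) -> None:
        self.pages = [ERASED] * PAGES_PER_BLOCK
        self.bytes_programmed = 0  # everything the flash physically wrote

    def program(self, index: int, data: bytes) -> None:
        if self.pages[index] is not ERASED:
            raise RuntimeError(f"page {index} must be erased before it can be written")
        self.pages[index] = data
        self.bytes_programmed += PAGE_SIZE

    def erase(self) -> None:
        self.pages = [ERASED] * PAGES_PER_BLOCK  # there is no single-page erase


def update_page_in_place(block: NandBlock, index: int, new_data: bytes) -> None:
    buffer = list(block.pages)         # 1. read the whole block into memory
    buffer[index] = new_data           # 2. update the one page in memory
    block.erase()                      # 3. erase the whole block
    for i, data in enumerate(buffer):  # 4. write the updated block back
        if data is not ERASED:
            block.program(i, data)


block = NandBlock()
for i in range(PAGES_PER_BLOCK):  # start with a completely full block
    block.program(i, b"old")
block.bytes_programmed = 0        # count only the writes caused by the update

update_page_in_place(block, 0, b"new")
print(block.bytes_programmed / PAGE_SIZE)  # WAF for this single 4KB host write
```

Calling `block.program(0, b"x")` directly on the full block raises an error, which is why the erase step is unavoidable on this path.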

In this extreme case, where all the other pages in the block had to be written back out, the original 4KB write from the host machine caused 512KB of data to be written (rewritten) to the block. Here, the WAF would be 512KB / 4KB = 128, the worst-case scenario. But as I said, this is an extreme case. In most cases:

1. A different page/block that is already erased is found, and the updated data is written to that free page/block.

2. The data pointer is remapped to this new page.

3. The previous page is marked ‘stale’, meaning it cannot be written to until its block is erased.
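The three steps above describe an out-of-place update, the job of the SSD's flash translation layer (FTL). A minimal sketch, assuming a hypothetical `ToyFTL` class that hands out erased pages lowest-first; real controllers use far more elaborate allocation policies.

```python
from collections import deque


class ToyFTL:
    """Toy flash translation layer: updates go to a free page, the old copy becomes stale."""

    def __init__(self, total_pages: int) -> None:
        self.free = deque(range(total_pages))  # erased physical pages, lowest first
        self.mapping: dict[int, int] = {}      # logical page -> physical page
        self.stale: set[int] = set()           # physical pages waiting for a block erase
        self.pages_programmed = 0

    def write(self, logical_page: int) -> int:
        if not self.free:
            raise RuntimeError("no erased pages left; a block must be reclaimed first")
        physical = self.free.popleft()          # 1. find an already-erased page
        old = self.mapping.get(logical_page)
        self.mapping[logical_page] = physical   # 2. remap the data pointer
        if old is not None:
            self.stale.add(old)                 # 3. old copy is stale until its block is erased
        self.pages_programmed += 1
        return physical


ftl = ToyFTL(total_pages=256)
ftl.write(0)  # original data for logical page 0
ftl.write(0)  # update: written to a new page, the previous one is marked stale
print(ftl.mapping[0], ftl.stale)
print(ftl.pages_programmed / 2)  # 2 pages programmed for 2 host writes
```

Here each host write costs exactly one page write (WAF = 1.0), but every update leaves a stale page behind, and stale pages can only be reused after their whole block is erased.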

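But as an SSD fills up, there are fewer free pages available. Because stale pages are typically mixed in with still-valid pages, creating an erased block means first copying that block's valid pages somewhere else, and those copies are extra writes the host never requested. These extra writes and erases can and will occur, causing SSDs to degrade over time. As indicated before, to keep the WAF low and help your SSDs run like new: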
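The effect of a fuller drive can be estimated with a simplified garbage-collection model. Assume (as an illustration, not a description of any particular controller) that every new free page must come from reclaiming one "victim" block: its still-valid pages are copied elsewhere, the block is erased, and the freed pages then absorb new host writes. If the victim holds v valid pages out of 128, reclaiming it costs v copies and frees 128 - v pages, so WAF = 128 / (128 - v). The function name is hypothetical, and the model ignores over-provisioned space and smarter victim selection.

```python
PAGES_PER_BLOCK = 128


def gc_write_amplification(valid_pages_in_victim: int, pages_per_block: int = PAGES_PER_BLOCK) -> float:
    """Steady-state WAF when every free page comes from garbage-collecting one victim block.

    Per reclaimed block:
        flash writes = copied valid pages + host pages written into the freed space
                     = valid + (pages_per_block - valid) = pages_per_block
        host writes  = pages_per_block - valid
    """
    if not 0 <= valid_pages_in_victim < pages_per_block:
        raise ValueError("the victim block must contain at least one stale page")
    freed = pages_per_block - valid_pages_in_victim
    return pages_per_block / freed


for valid in (0, 64, 96, 120, 127):
    print(f"{valid:3d}/128 valid -> WAF {gc_write_amplification(valid):.1f}")
```

As the drive fills, victim blocks tend to hold more valid data, and the WAF climbs steeply toward the 128x worst case.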

- Keep sufficient contiguous logical free space to enforce Sequential Writes to your SSDs.

- Enforce Sequential Writes rather than Random Writes.

The technology in DymaxIO does this automatically. Download a 30-day trial of DymaxIO.

I have been a customer for years and have not had any problems; thank you for the explanation. It makes me a bit concerned when you talk about the four-step process that occurs when you retain data in the same block. As data is read from the SSD, updated, the block erased, and then the block written, what happens if the machine loses power during the third step? Does data get lost, or is there some sort of built-in data transaction guarantee?

Hi Edward,

Very, very good question. I will have to see how the SSDs handle this. Maybe they use persistent memory and some transaction log to guarantee that the transaction will be completed when power is resumed.

Thanks,

GQ

WAF. In my audio hobby world that stands for “Wife Acceptance Factor” as it relates to the visual appeal of audio speakers or other kit and whether they are allowed in the main living space of the home. That made me smile.

I really enjoy reading about how an SSD works. I have been using your Diskeeper for HDDs for years. I purchased my first PC with an SSD a few weeks back, and I purchased your SSD-Diskeeper Home. I really like my new PC; it's super fast, and knowing I have SSD-Diskeeper to help keep it running smoothly puts me at ease.

Hi James,

That’s great to hear! Have a great week.

Thanks,

Kellie

Hi,

Thanks for the great article.

I have a question. Don't SSD controllers write data sequentially by design when free space is available? Why do I need software to do so, if the underlying controller will ignore any software's data reallocation (as I have read)?

Thanks again

Good question, and you are correct that many underlying controllers cache small writes and then write them out sequentially. This does help, but it does not eliminate the issue. If just one process on one machine were writing to the SSD, the SSD could gather up all of its writes and write them sequentially, with only a little more overhead than if the writes had arrived sequentially in the first place.

The problem is that it is usually not this simple. There are usually many processes running at the same time, so their small write I/Os get intermixed, and if many systems are writing to shared storage, it gets even worse. The controller will write the data out sequentially, but each sequential write contains I/Os from many different processes (and possibly different machines), because the controller does not know which small I/Os belong together. Data that belongs together therefore ends up spread across different pages on the SSD, which can increase the WAF. I hope this helps.
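A toy sketch of the effect described in this reply, under stated assumptions: two hypothetical processes, A and B, each write a 512KB file (128 pages), the controller packs incoming pages into 128-page blocks strictly in arrival order, and file A is later deleted. The helper names are illustrative, not any controller's actual logic. The printed number is how many still-valid pages would have to be copied before the blocks holding A's stale pages could be erased.

```python
from collections import Counter

PAGES_PER_BLOCK = 128


def place_sequentially(arrivals: list[str]) -> list[list[str]]:
    """Controller packs incoming page writes into blocks strictly in arrival order."""
    return [arrivals[i:i + PAGES_PER_BLOCK] for i in range(0, len(arrivals), PAGES_PER_BLOCK)]


def copies_to_reclaim(blocks: list[list[str]], deleted_owner: str) -> int:
    """Valid pages that must be relocated before every block holding deleted data can be erased."""
    copies = 0
    for block in blocks:
        owners = Counter(block)
        if owners[deleted_owner]:  # this block now contains stale pages
            copies += len(block) - owners[deleted_owner]  # the rest is still valid
    return copies


file_a = ["A"] * PAGES_PER_BLOCK  # 512KB written by process A
file_b = ["B"] * PAGES_PER_BLOCK  # 512KB written by process B

grouped = file_a + file_b
interleaved = [page for pair in zip(file_a, file_b) for page in pair]  # A, B, A, B, ...

print(copies_to_reclaim(place_sequentially(grouped), "A"))
print(copies_to_reclaim(place_sequentially(interleaved), "A"))
```

When A's pages arrive grouped, its block becomes entirely stale and can be erased with no copying; when they are interleaved with B's, both blocks are half stale, and all 128 of B's pages must be copied first, which is the extra write traffic that raises the WAF.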

Kellie
