If you are reading this article, you are most likely about to evaluate DymaxIO™ to improve SQL performance on a SQL Server (or already have our software installed on a few servers). You probably also have some questions about why we recommend, as a best practice, placing a memory limit on SQL Server in order to get the best performance from that server once you’ve installed one of our solutions.

To give our products a fair evaluation, there are certain best practices we recommend you follow. While it is true that most servers already have enough memory and need no adjustments or additions, a select group of high-I/O, high-performance, or high-demand servers may need a little extra care to run at peak performance.

This article is specifically focused on those servers and the best-practice recommendations below for available memory. They are precisely targeted to those “work-horse” servers. So, rest assured you don’t need to worry about adding tons of memory to your environment for all your other servers.

One best practice we won’t dive into here, which will be covered in a separate article, is the idea of deploying our software solutions to other servers that share the workload of the SQL Server, such as App Servers or Web Servers that the data flows through. However, in this article we will shine the spotlight on best practices for SQL Server memory limits.

An Analytical Approach

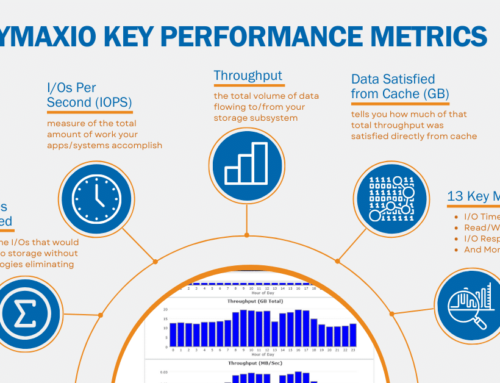

We’ve sold over 100 million licenses in over 30 years of providing Condusiv Technologies patented software. As a result, we take a longer term and more global view of improving performance, especially with the IntelliMemory® caching component that is part of DymaxIO. We care about maximizing overall performance knowing that it will ultimately improve application performance. We have a significant number of different technologies that look for I/Os that we can eliminate out of the stream to the actual storage infrastructure. Some of them look for inefficiencies caused at the file system level. Others take a broader look at the disk level to optimize I/O that wouldn’t normally be visible as performance-robbing. We use an analytical approach to look for I/O reduction that gives the most bang for the buck. This has evolved over the years as technology changes. What hasn’t changed is our global and long-term view of actual application usage of the storage subsystem and maximizing performance, especially in ways that are not obvious.

Our software solutions eliminate I/Os to the storage subsystem that the database engine is not directly concerned with, and as a result we can greatly improve the speed of I/Os sent to the storage infrastructure from the database engine. Essentially, we dramatically lessen the number of competing I/Os that slow down the transaction log writes, updates, data bucket reads, etc. If the I/Os that must go to storage anyway aren’t waiting for I/Os from other sources, they complete faster. And we do all of this using only an exceptionally small amount of idle, free, unused resources. Because our software is self-learning and dynamically allocates and releases resources depending on other system needs, anyone would be hard pressed to even detect it.

SQL Grabs Available Memory

It’s common knowledge that SQL Server has specialized caches for the indexes, transaction logs, etc. At a basic level the SQL Server cache does a good job, but it is also common knowledge that it’s not very efficient. It uses up way too much system memory, is limited in scope of what it caches, and due to the incredible size of today’s data stores and indexes it is not possible to cache everything. In fact, you’ve likely experienced that out of the box, SQL Server will grab onto practically all the available memory allocated to a system.

It is true that if SQL Server memory usage is left uncapped, there typically wouldn’t be enough memory for Condusiv’s software to create a cache with. That is why we recommend you set a maximum memory limit in SQL Server, leaving enough memory for the IntelliMemory cache to help offload more of the I/O traffic. For best results, you can easily cap the amount of memory that SQL Server consumes for its own form of caching or buffering. At the end of this article I have included a link to a Microsoft document on how to set Max Server Memory for SQL as well as a short video to walk you through the steps.

Rule of Thumb

To improve SQL performance, a general rule of thumb for busy SQL database servers is to limit SQL memory usage so that at least 16 GB of memory stays free. This allows enough room for the IntelliMemory cache to grow and really make that machine’s performance ‘fly’ in most cases. If you can’t spare 16 GB, leave 8 GB. If you can’t afford 8 GB, leave 4 GB free. Even that is enough to make a difference. If you are not comfortable with reducing the SQL Server memory usage, then at least set the maximum to what it typically uses and add 4-16 GB of additional memory to the system.
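The rule of thumb above can be turned into a quick calculation. The sketch below is only an illustration and is not part of DymaxIO: the function names `max_server_memory_mb` and `sp_configure_script` are hypothetical, and it assumes you already know the server's total memory in GB. It derives a cap that leaves the chosen amount free and builds the T-SQL that sets SQL Server's `max server memory (MB)` option through `sp_configure`.

```python
def max_server_memory_mb(total_gb: int, reserve_gb: int = 16) -> int:
    """Return a 'max server memory (MB)' value that leaves reserve_gb free.

    reserve_gb follows the rule of thumb: 16 GB if you can spare it,
    otherwise 8 GB, otherwise 4 GB.
    """
    if reserve_gb <= 0:
        raise ValueError("reserve_gb must be positive")
    if reserve_gb >= total_gb:
        raise ValueError("reserve_gb must be smaller than total_gb")
    return (total_gb - reserve_gb) * 1024  # SQL Server expects megabytes


def sp_configure_script(max_mb: int) -> str:
    """Build the T-SQL that applies the cap (hypothetical helper)."""
    return "\n".join([
        # 'max server memory (MB)' is an advanced option, so expose it first.
        "EXEC sp_configure 'show advanced options', 1;",
        "RECONFIGURE;",
        f"EXEC sp_configure 'max server memory (MB)', {max_mb};",
        "RECONFIGURE;",
    ])


if __name__ == "__main__":
    # Example: a 64 GB server on which 16 GB is left free for caching.
    cap_mb = max_server_memory_mb(total_gb=64, reserve_gb=16)
    print(sp_configure_script(cap_mb))
```

Review the generated script before running it against a real instance, and pick the reserve tier (16, 8, or 4 GB) based on what the server can actually spare.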

We have intentionally designed our software so that it can’t compete for system resources with anything else that is running. This means our software should never trigger a memory starvation situation. IntelliMemory will only use some of the free or idle memory that isn’t being used by anything else, and will dynamically scale our cache up or down, handing memory back to Windows if other processes or applications need it.

Think of our IntelliMemory caching strategy as complementary to what SQL Server caching does, but on a much broader scale. IntelliMemory caching is designed to eliminate the type of storage I/O traffic that tends to slow the storage down the most. While that tends to be the smaller, more random read I/O traffic, there are often many repetitive I/Os, intermixed with larger I/Os, which wreak havoc and cause storage bandwidth issues. Also keep in mind that I/Os satisfied from memory are 10-15 times faster than going to flash.
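To get a feel for what the 10-15 times figure means in practice, here is a rough back-of-the-envelope sketch. It is a simplified model and not a measurement: the function name `overall_read_speedup` and the hit ratios used are hypothetical, and it assumes every read costs one unit of time from storage and 1/speedup units from memory. It ignores the additional benefit of fewer competing I/Os reaching storage.

```python
def overall_read_speedup(hit_ratio: float, memory_speedup: float) -> float:
    """Estimate the average read speedup for a given cache hit ratio.

    hit_ratio:       fraction of reads served from memory (0.0 to 1.0)
    memory_speedup:  how many times faster a memory hit is than storage
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    if memory_speedup <= 0:
        raise ValueError("memory_speedup must be positive")
    # Average time per read, relative to a read that always goes to storage.
    relative_time = hit_ratio / memory_speedup + (1.0 - hit_ratio)
    return 1.0 / relative_time


if __name__ == "__main__":
    # Hypothetical hit ratios, using the low end (10x) of the article's range.
    for ratio in (0.25, 0.50, 0.75):
        print(f"hit ratio {ratio:.0%}: {overall_read_speedup(ratio, 10):.2f}x")
```

The takeaway from this simple model is that the gain depends heavily on how many reads the cache can absorb, which is why leaving more free memory for the cache matters.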

Secret Sauce

So, what’s the secret sauce? We use a very lightweight storage filter driver to gather telemetry data. This allows the software to learn useful things like:

- What are the main applications in use on a machine?

- What type of files are being accessed and what type of storage I/O streams are being generated?

- And, at what times of the day, the week, the month, the quarter?

IntelliMemory is aware of the ‘hot blocks’ of data that need to be in the memory cache, and more importantly, when they need to be there. Since our cache only loads data we know you’ll reference, IntelliMemory is far more efficient in terms of memory usage versus I/O performance gains. We can also use that telemetry data to figure out how best to size the storage I/O packets to give the main application the best performance. If the way you use that machine changes over time, we automatically adapt to those changes, without you having to reconfigure or ‘tweak’ any settings.

Stay tuned for the next in the series to help improve SQL performance: Thinking Outside The Box Part 2 – Test vs. Real World Workload Evaluation.

Main takeaways:

- Most of the servers in your environment already have enough free and available memory and will need no adjustments of any kind.

- Limit SQL memory so that there is a minimum of 8 GB free for any server with more than 40 GB of memory and a minimum of 6 GB free for any server with 32 GB of memory. If you have the room, leave 16 GB or more memory free for IntelliMemory to use for caching.

- Another best practice is to deploy our software to all Windows servers that interact with the SQL Server. More on this in a future article.

Microsoft Document – Server Memory Server Configuration Options

Short video – Best Practices for Available Memory for DymaxIO (formerly V-locity or Diskeeper)

This video demonstration was performed with the V-locity software. Please note that it is applicable to DymaxIO as well.

At around the 3:00 mark, capping SQL memory is demonstrated.
