The #1 request I’ve been getting from customers is a white board video that succinctly explains the two silent killers of VM performance and how our I/O reduction guarantees to solve performance problems, so applications run perfectly on every Windows server.

Expensive backend storage upgrades should ONLY take place when needing more capacity – not more performance. Anytime I tell someone our I/O reduction software guarantees to solve their toughest performance problems…the very first response is invariably the same…HOW? Not only have I answered this question hundreds of times, our own customers find themselves answering this question repeatedly to other team members or new hires.

To make this easier, I’ve answered it all here in this 10-min White Board Video ->, or you can continue reading.

Most of us have been upgrading hardware to get more performance ever since we can remember. It’s become so engrained, it’s often times the ONLY approach we think of when needing a performance upgrade.

For many organizations, they don’t necessarily need a performance boost on EVERY application, but they need it on one or two I/O intensive applications. To throw a new all-flash array or new hybrid array at a performance problem ends up being the most expensive and disruptive way to solve a performance problem when all you have to do is the same thing thousands of our customers have done: simply try our I/O reduction software on any Windows server and watch the application run at least 50% faster and in many cases 2X-10X faster.

Most IT professionals are unaware of the fact that as great as virtualization has been for server efficiency, the one downside is how it adds complexity to the data path. On top of that, Windows doesn’t play well in a virtual environment (or any environment where it is abstracted from the physical layer). This means I/O characteristics that are a lot smaller, more fractured and more random than they need to be – the perfect trifecta for bad storage performance.

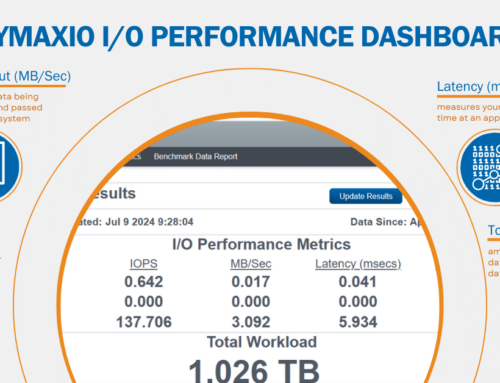

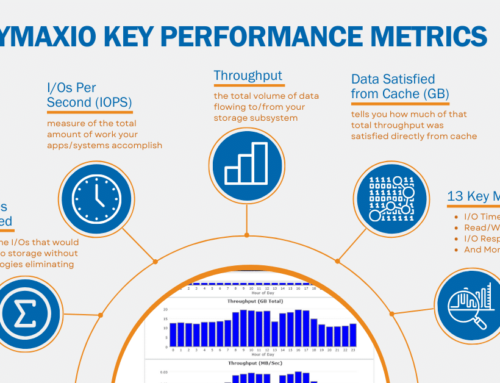

This “death by a thousand cuts” scenario means systems are processing workloads about 50% slower than they should. Condusiv’s I/O reduction software solves this problem by displacing many small tiny writes and reads with large, clean contiguous writes and reads. As huge as that patented engine is for our customers, it’s not the only thing we’re doing to make applications run smoothly. Performance is further electrified by establishing a tier-0 caching strategy – automatically using idle, available memory to serve hot reads. This is the same battle-tested technology that has been OEM’d by some of the largest out there – Dell, Lenovo, HP, SanDisk, Western Digital, just to name a few.

Although we might be most known for our first patented engine that solves Windows write inefficiencies to HDDs or SSDs, more and more customers are discovering just how important our patented DRAM caching engine is. If any customer can maintain even just 4GB of available memory to be used for cache, they most often see cache hit rates in the range of 50%. That means serving data out of DRAM, which is 15X faster than SSD and opens up even more precious bandwidth to and from storage for everything else. Other customers who really need to crank up performance are simply provisioning more memory on those systems and seeing >90% cache hit rates.

See all this and more described in the latest Condusiv I/O Reduction White Board video that explains eeevvvveeerything you need to know about the problem, how we solve it, and the typical results that should be expected in the time it takes you to drink a cup of coffee. So go get a cup of coffee, sit back, relax, and see how we can solve your toughest performance problems – guaranteed.

Leave A Comment

You must be logged in to post a comment.