When reading articles, blogs, and forums posted by well-respected (or at least well intentioned people) on the subject of fragmentation and SSDs, many make statements about how (1) SSDs don’t fragment, or (2) there’s no moving parts, so no problem, or (3) an SSD is so fast, why bother? We all know and agree SSDs shouldn’t be “defragmented” since that shortens lifespan, so is there a problem after all?

The truth of the matter is that applications running on Windows do not talk directly to the storage device. Data is referenced as an abstracted layer of logical clusters rather than physical track/sectors or specific NAND-flash memory cells. Before a storage unit (HDD or SSD) can be recognized by Windows, a file system must be prepared for the volume. This takes place when the volume is formatted and in most cases is set with a 4KB cluster size. The cluster size is the smallest unit of space that can be allocated. Too large of a cluster size results in wasted space due to over allocation for the actual data needed. Too small of a cluster size causes many file extents or fragments. After formatting is complete and when a volume is first written to, most all of the free space is in just one or two very large sections. Over the course of time as files of various sizes are written, modified, re-written, copied, and deleted, the size of individual sections of free space as seen from the NTFS logical file system point of view becomes smaller and smaller. I have seen both HDD and SSD storage devices with over 3 million free space extents. Since Windows lacks file size intelligence when writing a file, it never chooses the best allocation at the logical layer, only the next available – even if the next available is 4KB. That means 128K worth of data could wind up with 32 extents or fragments, each being 4KB in size. Therefore SSDs do fragment at the logical Windows NTFS file system level. This happens not as a function of the storage media, but of the design of the file system.

Let’s examine how this impacts performance. Each extent of a file requires its own separate I/O request. In the example above, that means 32 I/O operations for a file that could have taken a single I/O if Windows was smarter about managing free space and finding the best logical clusters instead of the next available. Since I/O takes a measurable amount of time to complete, the issue we’re talking about here related to SSDs has to do with an I/O overhead issue.

Even with no moving parts and multi-channel I/O capability, the more I/O requests needed to complete a given workload, the longer it is going to take your SSD to access the data. This performance loss occurs on initial file creation and carries forward with each subsequent read of the same data. But wait… the performance loss doesn’t stop there. Once data is written to a memory cell on an SSD and later the file space is marked for deletion, it must first be erased before new data can be written to that memory cell. This is a rather time consuming process and individual memory cells cannot be individually erased, but instead a group of adjacent memory cells (referred to as a page) are processed together. Unfortunately, some of those memory cells may still contain valuable data and this information must first be copied to a different set of memory cells before the memory cell page (group of memory cells) can be erased and made ready to accept the new data. This is known as Write Amplification. This is one of the reasons why writes are so much slower than reads on an SSD. Another unique problem associated with SSDs is that each memory cell has a limited number of times that a memory cell can be written to before that memory cell is no longer usable. When too many memory cells are considered invalid the whole unit becomes unusable. While TRIM, wear leveling technologies, and garbage collection routines have been developed to help with this behavior, they are not able to run in real-time and therefore are only playing catch-up instead of being focused on the kind of preventative measures that are needed the most. In fact, these advanced technologies offered by SSD manufacturers (and within Windows) do not prevent or reverse the effects of file and free space fragmentation at the NTFS file system level.

The only way to eliminate this surplus of small, tiny writes and reads that (1) chew up performance and (2) shorten lifespan from all the wear and tear is by taking a preventative approach that makes Windows “smarter” about how it writes files and manages free space, so more payload is delivered with every I/O operation. That’s exactly why more users run Condusiv’s Diskeeper® (for physical servers and workstations) or V-locity® (for virtual servers) on systems with SSD storage. (Diskeeper, SSDkeeper, and V-locity are now DymaxIO™) For anyone who questions how much value this approach adds to their systems, the easiest way to find out is by downloading a free 30-day trial and watch the “time saved” dashboard for yourself. Since the fastest I/O is the one you don’t have to write, Condusiv software understands exactly how much time is saved by eliminating multiple, fractured writes with fewer, larger contiguous writes. It even has an additional feature to cache reads from idle, available DRAM (15X faster than SSD), which further offloads I/O bandwidth to SSD storage. Especially for businesses with many users accessing a multitude of applications across hundreds or thousands of servers, the time savings are enormous.

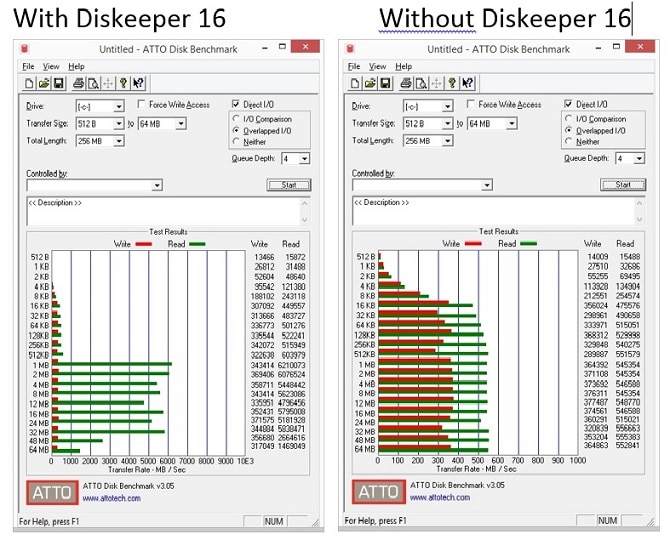

ATTO Benchmark Results with and without Diskeeper 16 running on a 120GB Samsung SSD Pro 840. The read data caching shows a 10X improvement in read performance.

Leave A Comment

You must be logged in to post a comment.